Development guide

Possibilities of Conmo

Conmo framework has been designed to be user-friendly for recreating and evaluating experiments, but also for adding new algorithms, datasets, preprocesses, etc. This section explains the possibilities offered by this framework when implementing new submodules. We believe that using and contributing to Conmo can benefit all types of users, as well as helping to standardise comparisons between results from different scientific articles.

If you still have doubts about the implementation of new components to the framework, you can take a look at the API reference, examples or contact the developers.

Add a new dataset

Dataset is the core abstract class for every dataset in Conmo and contains basic methods and attributes that are common for all datasets.

At the same time, two classes depend on it and differ acording to where the original data is stored:

LocalDataset:Is the abstract class in charge of handling datasets that are stored locally on the computer where Conmo will be running. The main method of this class is

LocalDataset.load(). It’s in charge of parsing the original dataset files to Conmo’s format and moving them to the data folder. It’s an abstract method wich needs to be implemented in every local dataset. There is also an abstract methodfeed_pipeline()to copy selected data to pipeline step folder.

RemoteDataset:In case the dataset to be implemented is originally located on a web server, a Git repository or other remote hosting, the RemoteDataset class is available in Conmo. Among all its methos, it’s remarkable the

RemoteDataset.download()to download the dataset from a remote URL.

For adding a new local dataset to the framework you need to create a new class that inherits from LocalDataset and override the following methods:

LocalDataset.__init__():This is the constructor of the class. Here you can call the constructor of the father class to assign the path to thw original dataset. Here you can also define some attributes of the class, like the label’s columns names, feature’s names. Also you can assign the subdataset that you want to instanciate.

LocalDataset.dataset_files():This method must return a list with all the files (data and labels) that compounds the dataset.

LocalDataset.load():This method must convert all raw dataset files to the appropriate format for Conmo’s pipeline. For each of the datasets, first read and load the data and labels into Pandas dataframes, then concatenate them (e.g. train data and test data will be concatenated in one dataframe, the same for test) and finally save them in parquet format. Some considerations to take into account:

Data and labels dataframes will have at least a multi-index for sequence and time. You can consult more information in the Pandas documentation.

The columns index must start at 1.

If there dataset is only splittered into train and test, then there will be 2 sequences, one per set.

In case the dataset is a time series with sequences, train sequences go after the test sequences.

LocalDataset.feed_pipeline():This method is used to copy the dataset from data directory to the directory Dataset of the experiment.

LocalDataset.sklearn_predefined_split():If you plan to use the Predefined Split from the Sklearn library your class must implement this method. It must generate an array of indexes of same length as sequences to be used with PredefinedSplit. The index must start at 0.

For adding a new remote dataset to the framework the procedure is almost identical to a local dataset. You need to create a new class that inherits from RemoteDataset and override the following methods:

RemoteDataset.__init__():This is the constructor of the class. Here you can call the constructor of the father class to assign the path to thw original dataset. You can also define some attributes of the class, like the label’s columns names, features’s names. , file format, URL and checksum. Also you can assign the subdataset that you want to instanciate.

RemoteDataset.dataset_files():This method must return a list with all the files (data and labels) that compounds the dataset.

RemoteDataset.parse_to_package():Almost identical to

LocalDataset.load().

RemoteDataset.feed_pipeline():This method is used to pass the dataset from data directory to the directory Dataset of the experiment.

RemoteDataset.sklearn_predefined_split():If you plan to use the Predefined Split from the Sklearn library your class must implement this method. It must generate an array of indexes of same length as sequences to be used with PredefinedSplit. The index must start at 0.

Add a new algorithm

Conmo provides a core abstract class named Algorithm that contains the basic methods for the operation of any algorithm, mainly training with a training set, performing a prediction over test, loading and saving input and output data.

Depending on the type of anomaly detection algorithm to be implemented, there are two classes depending on the operation of the method:

AnomalyDetectionThresholdBasedAlgorithm:If your algorithm needs to calculate a threshold to determine which samples are anomalous it must inherit from this class. For example: PCA Mahalanobis.

AnomalyDetectionClassBasedAlgorithm:If your algorithm identifies by classes the normal sequences from the anomalous ones, it must inherit from this class. For example: One Class SVM.

PretrainedAlgorithm:Check out this class if your algorithm was pre-trained prior to running an experiment, i.e. it is not necessary to train it during the experiment. It is required to be able to define the path where the pre-trained model is stored on disk.

For adding a new algorithm to the framework you need to create a new class that inherits from one of these classes depending of the type of the algorithm and override the following methods:

__init__():Constructor of the class. Here you can initialize all the hyperparameters needed for the algorithm. Also you can fix random seeds of Tensorflow, Numpy, etc here for reproducibilty purposes.

fit_predict():Method responsible of building, training the model with the training data and testing it with the test set. In case your algorithm is threshold-based, it will be necessary to verify that each output in the test set exceeds that threshold to determine that it is anomalous. In the case of a class-based algorithm, depending on the output, it will be necessary to identify whether it is an outlier or an anomaly. Finally, the output dataframe has to be generated with the labels by sequence or by time.

find_anomaly_threshold():In case the algorithm is threshold based, the threshold selection can be customised overriding this method.

You can add auxiliary methods for model construction, weights loading, etc. in case the model structure is very complex.

Add a new splitter

The core abstract class is Splitter and provides some methods to load inputs, save outputs and check it the input was already splittered.

For adding a new splitter you must create a new class that inherits from Splitter and implements the method Transform().

If the splitters you want to implement is available on Scikit-Learn library, we provide the class SklearnSplitter and indicating the name of the splitter to be used will allow you to use it in your experiment.

Add a new preprocess

ExtendedPreprocess Class is used for the implementation of new preprocessings in the pipeline. ExtendedPreprocess inherits from the core abstract class Preprocess and provides a constructor in order to define which parts of the dataset will be modified by the preprocessing: labels, data, test or train. Also permits to apply the preprocess to a specyfic set of columns.

To define a new preprocess you only need to create a new class than inherits from ExtendedPreprocess and implements the method Transform(), where the preprocessing will be applied to th datset.

If the preprocess you want to implement is available on Sklearn library, we provide the class SklearnPreprocess and indicating the name of the preprocessing to be used will allow you to use it in your experiment.

In order to make things easier, the CustomPreprocess class is available to implement a preprocessing tool from a function, which will be passed as an argument in the constructor. For additional information you can have a look at the example nasa_cmapps.py.

Add a new metric

You can add a new metric by creating a new class that inherits from the abstract class Metric.

The only method you have to take care is:

calculate():Based on the outputs of the algorithms and the number of folds, the results are computed and the metrics dataframe is created and stored.

CSV dataset import example

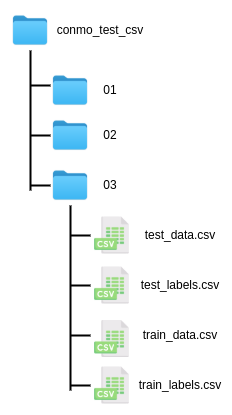

A very common use case that Conmo users may encounter is to add a new dataset that is stored in CSV format. For this case we have developed this small guide, which includes a template as an example. The dataset is stored locally so it will inherit from LocalDataset. It contains three subdatasets stored in different directories, in all of them there are CSV files for data and labels, both for train and test:

The template:

1import os

2import shutil

3from os import path

4from typing import Iterable

5

6import pandas as pd

7

8from conmo.conf import File, Index, Label

9from conmo.datasets.dataset import LocalDataset

10

11

12class CSV_Dataset(LocalDataset):

13 # ------------------------------------------------------------------------------------ #

14 # Define constants here ... #

15 # ------------------------------------------------------------------------------------ #

16 #

17 EX_CONST = 22

18 EX_SUBDATASETS = ['01', '02', '03']

19 EX_COL_NAMES = ['A', 'B', 'C']

20

21 # ------------------------------------------------------------------------------------ #

22 # Constructor of the class #

23 # Call super class constructor to pass path where the raw dataset is stored #

24 # Here you can initialize attributes with passed values #

25 # and the specific subbdataset to be used when instantiating #

26 # ------------------------------------------------------------------------------------ #

27 #

28

29 def __init__(self, path: str, subdataset: str) -> None:

30 super().__init__(path)

31 self.path = path

32 self.subdataset = subdataset

33

34 # ------------------------------------------------------------------------------------ #

35 # Loads the original CSV files to Pandas dataframes, #

36 # give them the appropriate format and finally save them to disk. #

37 # ------------------------------------------------------------------------------------ #

38 #

39 def load(self) -> None:

40 # SOME CONSIDERATIONS:

41 # - You can use Pandas utility read_csv()

42 # - Index must start at 1, not 0

43 # - Generate only 1 file for data and other for labels

44 # - Necessary a multi-index with two levels, an outer level of sequences and an inner level of sequences.

45 # - If there is both train and test data, each of them shall form a sequence.

46

47 # Iterate over files in the directory where the local original data is stored

48 for subdataset in os.listdir(self.path):

49 # ------------------------------------------------------------------------------------ #

50 # Read data CSV and generate dataframe

51 train_data = pd.read_csv(path.join(

52 self.path, subdataset, 'train_data.csv'), sep=',', header=None, names=self.EX_COL_NAMES)

53 test_data = pd.read_csv(path.join(

54 self.path, subdataset, 'test_data.csv'), sep=',', header=None, names=self.EX_COL_NAMES)

55

56 # Reset index for starting from 1 (Conmos format)

57 train_data.index += 1

58 test_data.index += 1

59

60 # Concatenate train and test data into 1 dataframe. (Always first train data)

61 # Time is and old name and needs to be upgraded but the purpose is the same as a normal Index

62 data = pd.concat([train_data, test_data], keys=[

63 1, 2], names=[Index.SEQUENCE, Index.TIME])

64

65 # Sort index after concatenate

66 data.sort_index(inplace=True)

67

68 # ------------------------------------------------------------------------------------ #

69 # Read labels CSV and generate dataframe

70 train_labels = pd.read_csv(path.join(

71 self.path, subdataset, 'train_labels.csv'), sep=',', header=None, names=[Label.ANOMALY])

72 test_labels = pd.read_csv(path.join(

73 self.path, subdataset, 'train_labels.csv'), sep=',', header=None, names=[Label.ANOMALY])

74

75 # Reset index for starting from 1 (Conmo's format)

76 train_labels.index += 1

77 test_labels.index += 1

78

79 # Concatenate train and test data into 1 dataframe. (Always first train data)

80 # Time is and old name and needs to be upgrade but the purpose is the same as a normal Index

81 labels = pd.concat([train_labels, test_labels], keys=[

82 1, 2], names=[Index.SEQUENCE, Index.TIME])

83

84 # Sort index after concatenate

85 labels.sort_index(inplace=True)

86

87 # ------------------------------------------------------------------------------------ #

88 # Finally save dataframes to disk in /home/{username}/conmo/data/... in parquet format

89 data.to_parquet(path.join(self.dataset_dir, '{}_{}'.format(

90 subdataset, File.DATA)), compression='gzip', index=True)

91 labels.to_parquet(path.join(self.dataset_dir, '{}_{}'.format(

92 subdataset, File.LABELS)), compression='gzip', index=True)

93

94 # ------------------------------------------------------------------------------------ #

95 # Method for adding to a list the different files that #

96 # belong to the dataset #

97 # Usually iterate over the subdatasets #

98 # ------------------------------------------------------------------------------------ #

99 #

100 def dataset_files(self) -> Iterable:

101 files = []

102 for key in self.EX_SUBDATASETS:

103 # Data

104 files.append(path.join(self.dataset_dir,

105 "{}_{}".format(key, File.DATA)))

106 # Labels

107 files.append(path.join(self.dataset_dir,

108 "{}_{}".format(key, File.LABELS)))

109 return files

110

111 # ------------------------------------------------------------------------------------ #

112 # Method for adding to pipeline step folder #

113 # Move from dataset_dir to out_dir data and labels #

114 # ------------------------------------------------------------------------------------ #

115 #

116 def feed_pipeline(self, out_dir: str) -> None:

117 # Data

118 shutil.copy(path.join(self.dataset_dir, "{}_{}".format(

119 self.subdataset, File.DATA)), path.join(out_dir, File.DATA))

120 # Labels

121 shutil.copy(path.join(self.dataset_dir, "{}_{}".format(

122 self.subdataset, File.LABELS)), path.join(out_dir, File.LABELS))

123

124 # ------------------------------------------------------------------------------------ #

125 # OPTIONAL: Only implement if you plan to use #

126 # PredefinedSplit method of Scikit-Learn library. #

127 # Returns indexes of sequences: #

128 # -1 -> if the sequence will be excluded on test set #

129 # 0 -> Test set #

130 # ------------------------------------------------------------------------------------ #

131 #

132 def sklearn_predefined_split(self) -> Iterable[int]:

133 return [-1, 0]

Once the class is ready, the respective import has to be added to the __init__ file and the class name to the __all__ list as follows:

1from conmo.datasets.mars_science_laboratory_mission import MarsScienceLaboratoryMission

2from conmo.datasets.nasa_turbofan_degradation import NASATurbofanDegradation

3from conmo.datasets.server_machine_dataset import ServerMachineDataset

4from conmo.datasets.soil_moisture_active_passive_satellite import SoilMoistureActivePassiveSatellite

5from conmo.datasets.batteries_degradation import BatteriesDataset

6#----------------------------------------

7# Add import to __init__ file of the module

8from conmo.datasets.csv_dataset import CSV_Dataset

9#----------------------------------------

10

11__all__ = [

12 'NASATurbofanDegradation'

13 'ServerMachineDataset'

14 'SoilMoistureActivePassiveSatellite'

15 'MarsScienceLaboratory'

16 'BatteriesDataset'

17 #----------------------------------------

18 # Add class name here

19 'CSV_Dataset'

20 #----------------------------------------

21]

Finally the dataset is ready to be used in an experiment:

1import os

2import sys

3

4# Add package to path (Uncomment only in case you have downloaded Conmo from github repository)

5sys.path.append(os.path.abspath(os.path.join(os.path.dirname(__file__), '..')))

6

7from sklearn.preprocessing import MinMaxScaler

8

9from conmo.experiment import Experiment, Pipeline

10from conmo.algorithms import OneClassSVM

11from conmo.datasets import CSV_Dataset

12from conmo.metrics import Accuracy

13from conmo.preprocesses import SklearnPreprocess

14from conmo.splitters import SklearnSplitter

15from sklearn.model_selection import PredefinedSplit

16from sklearn.preprocessing import MinMaxScaler

17

18# Pipeline definition

19dataset = CSV_Dataset('/home/lucas/conmo_test_csv', '01')

20splitter = SklearnSplitter(splitter=PredefinedSplit(dataset.sklearn_predefined_split()))

21preprocesses = [

22 SklearnPreprocess(to_data=True, to_labels=False,

23 test_set=True, preprocess=MinMaxScaler()),

24]

25algorithms = [

26 OneClassSVM()

27]

28metrics = [

29 Accuracy()

30]

31pipeline = Pipeline(dataset, splitter, preprocesses, algorithms, metrics)

32

33

34# Experiment definition and launch

35experiment = Experiment([pipeline], [])

36experiment.launch()

Coding conventions

The following tools are used to ensure that new software being added to Conmo meets minimum quality and format requirements:

Autopep8: We use this tool to automatically format our Python code to conform to the PEP 8 style guide. It uses the pycodestyle utility to determine what parts of the code needs to be formatted.

Isort: We use this library to sort imports alphabetically, and automatically separated into sections and by type.

Pytest: To ensure that the output format of a new designed step (algorithm, dataset, etc) is correct we use Pytest framework to testing the new code. This tetsing frameworks is easy to use and supoort complex testing at the same. At the moment we are finishing the implementation of tests on the existing code, so there could be parts that may be modified in future updates.